As we already mentioned, one of our biggest challenges in 2019 was starting our front-end migration. In this article, we'll detail the steps of this migration and what we learned along the way.

Why did we want to migrate?

Our website is built on top of a big PHP monolith. We started splitting this monolith into small microservices 2 years ago, but our web pages are still served by the monolith. The front-end is a mix of PHP views and AngularJS components.

Although AngularJS changed the front-end landscape when it was released, it is now outdated, and Google has moved on to its successor, Angular 2+.

AngularJS has also long been criticized for its steep learning curve and its performance issues (hello, dirty checking).

AngularJS had become a problem for several reasons:

- AngularJS code was tightly coupled with PHP, making it hard to reason about and maintain

- Hard to recruit developers willing to work with AngularJS

- No server-side rendering (SSR)

- It is slow (first rendering and change detection)

- Big bundle size, increasing the page load time

It was clear that we needed something more modern: faster to load, easier to maintain, and with support for SSR.

SEO is very important for us. Although most search engine bots can execute JS, doing so takes time, and that is time the bot won't spend crawling other pages (the number of pages a bot is willing to crawl on a site is called the crawl budget).

By using SSR, we make better use of our crawl budget, so bots can crawl more pages in less time (we have hundreds of thousands of indexable pages, all available in 4 languages).

Picking the right strategy


When you want to migrate your front-end to a new technology, you have different options:

- Recreate everything from scratch with the new technology and replace everything in a big-bang release

- Rewrite parts of the existing pages with the new technology until everything uses it (cohabitation)

- Create a second application and migrate pages to it one by one

Before going through each option, let's give more context about Hosco. Hosco is a complex website with ~1 million users. It has around one hundred routes, almost all of its pages are public, and it's translated into 4 languages. So it's not a small website that you can rebuild in one or two months.

Rebuilding everything from scratch is rarely a good idea. It could be a good option if you have very few pages, but not in our case.

It would have meant freezing deployments for months and only shipping something after a year (or more). This is a very risky and expensive option, and we quickly discarded it.

The second option is safer, as it lets you work in iterations. But because our biggest problem was the coupling between PHP and AngularJS, adding a new technology in the middle wouldn't have helped much. It would also have increased our bundle size, which was already quite big.

The third option is a mix of the first two: a fresh application, but delivered iteratively. We went for it because it suited most of our needs. We're not saying it's always the best option, but it was the best one for us. As we use Kubernetes, it was also easy to implement: with an Ingress, you can easily route some paths to a different service.
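Ingress rules themselves are written in YAML, which we won't reproduce here. As a rough mental model only, the TypeScript sketch below mimics how path-prefix rules pick a backend: paths are compared segment by segment, and the longest matching prefix wins. The service names (`php-monolith`, `nextjs-frontend`) and the `/jobs` path are hypothetical.

```typescript
// A toy model of prefix-based routing, NOT Kubernetes code.
// Service names and paths are hypothetical.
type Rule = { path: string; service: string };

const rules: Rule[] = [
  { path: "/", service: "php-monolith" }, // everything not migrated yet
  { path: "/jobs", service: "nextjs-frontend" }, // pages already migrated
];

// "/jobs/chef/" -> ["jobs", "chef"] (requestPath is assumed to have no query string)
function segments(path: string): string[] {
  return path.split("/").filter((s) => s.length > 0);
}

// Element-wise prefix match: "/jobs" matches "/jobs/chef" but not "/jobsearch".
function matchesPrefix(rulePath: string, requestPath: string): boolean {
  const prefix = segments(rulePath);
  const request = segments(requestPath);
  return prefix.length <= request.length && prefix.every((seg, i) => seg === request[i]);
}

// Among the matching rules, the one with the longest path wins.
function routeFor(requestPath: string, table: Rule[] = rules): string | undefined {
  let best: Rule | undefined;
  for (const rule of table) {
    if (!matchesPrefix(rule.path, requestPath)) continue;
    if (!best || segments(rule.path).length > segments(best.path).length) best = rule;
  }
  return best ? best.service : undefined;
}
```

With this model, migrating another page boils down to adding one more rule that points at the new service, while every other path keeps falling through to the monolith.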

We knew that the first iteration would take more time than the next ones, as we needed to rebuild the whole layout (header, footer) and all the core features (login, translation, routing, ...). But once it was deployed, we could keep iterating quickly.

Because we wanted to keep our front-end code separate from our back-end code, we used a separate git repository with its own CI/CD pipelines.

The migration

We first built small proofs of concept to evaluate each candidate technology (React, VueJS and Angular).

In the end, we decided to go with React. The learning curve is smooth, it has a strong community, and it's easy to recruit React developers. The release of hooks also tipped the balance, as they simplified a lot of things.

We also decided to use NextJS, to simplify the SSR implementation. It is also the most popular SSR framework for React, and the documentation is quite good.

We decided to start with the migration of all our job listing pages. These pages represent a very high percentage of our indexable pages.

We also took the opportunity to completely redesign these pages, which added more time for development (but it was worth it).

It took us around 5 months to develop and deploy this first iteration. One team of 4 devs was working full-time on this project. One dev was in charge of the core features (authentication, i18n, integration with other services), while the rest of the team was focused on developing the page features (search, listing, layout, etc).

What have we learned?


During these 5 intensive months of development, we've learned a lot of things.

Most of the challenges were related to SSR, mainly because it was new to the whole team and not many companies had battle-tested it yet.

Storing the global state

In the beginning, we thought that using the React context API to store the state of our pages would be enough. Hooks were new and the community was very excited about them, so we thought it would be a good idea to use this new pattern. We split our global state into small pieces, each one using a different context.

We had something like this (simplified):

```tsx
// pages/_app.tsx
import App, { AppContext } from 'next/app';
// Simplified: Props, service, theme, ThemeProvider, UserProvider and
// NotificationsProvider are project-specific (imports omitted)

class BaseApp extends App<Props> {
  public static async getInitialProps({ Component, ctx }: AppContext) {
    const user = await service.getUser();
    const notifications = await service.getNotifications();

    let pageProps;
    if (Component.getInitialProps) {
      pageProps = await Component.getInitialProps(ctx);
    }

    return { pageProps, user, notifications };
  }

  public render() {
    const { Component, pageProps, user, notifications } = this.props;

    return (
      <UserProvider value={user}>
        <NotificationsProvider value={notifications}>
          <ThemeProvider theme={theme}>
            <Component {...pageProps} />
          </ThemeProvider>
        </NotificationsProvider>
      </UserProvider>
    );
  }
}
```

For each context we also created a custom hook to return the context value, so using this state in components was very easy:

```tsx
const UserCard = () => {
  const user = useUser();
  return <Card title={user.name} picture={user.avatar.small} />;
};
```

But at some point, we faced the limits of this solution... How do we access our global state from getInitialProps?

getInitialProps is an asynchronous function that returns the data needed by the page. When NextJS renders a page, it calls this function and then passes what is returned to the page component as props.

The issue is that this function is not executed in the React scope. In other words, it doesn't know anything about the component tree or React contexts. Because it is not called inside a component, it is not possible to use hooks either.

So imagine that you store the current user in a context, and then the user navigates to another page. How does getInitialProps know who the current user is? The data is in the context, but you can't access it. You could re-fetch the user from the server on every page change, but that defeats the purpose of building a SPA.

We had to admit that we needed another solution for global state management. We needed to store the state outside of React, so it could be available from getInitialProps. We decided to use Redux, as most of the team was already familiar with this library.
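The key idea is that the store lives outside the React tree, so a plain function such as getInitialProps can read it. The sketch below is a hand-rolled, Redux-like store (it is not the Redux API), with hypothetical names (`getOrCreateStore`, `loadPage`, `fetchUser`). It also shows a detail that matters with SSR: on the server, each request needs its own store, otherwise different users would share state, while in the browser a single store can live for the whole session. Libraries such as next-redux-wrapper take care of this split for you.

```typescript
// Hand-rolled, Redux-like store for illustration (not the Redux API).
type User = { id: number; name: string };
type State = { user: User | null };
type Action = { type: "user/loaded"; user: User };

function reducer(state: State = { user: null }, action?: Action): State {
  return action && action.type === "user/loaded" ? { ...state, user: action.user } : state;
}

class Store {
  private state: State = reducer(undefined);
  getState(): State {
    return this.state;
  }
  dispatch(action: Action): void {
    this.state = reducer(this.state, action);
  }
}

// Server: a new store per request, so two users never share state.
// Browser: one store for the whole session, so it survives page changes.
let browserStore: Store | undefined;
function getOrCreateStore(isServer: boolean): Store {
  if (isServer) return new Store();
  if (!browserStore) browserStore = new Store();
  return browserStore;
}

// Stand-in for getInitialProps: no React context here, but the store is reachable,
// so the user is fetched only when it is not already in the state.
async function loadPage(isServer: boolean, fetchUser: () => Promise<User>): Promise<Store> {
  const store = getOrCreateStore(isServer);
  if (!store.getState().user) {
    store.dispatch({ type: "user/loaded", user: await fetchUser() });
  }
  return store;
}
```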

As React Redux provides hooks too (useSelector), the migration was quite easy. Our components didn't have to change; we just replaced the contexts with Redux state and reducers.

We ended up with something similar to this:

```tsx
// pages/_app.tsx
import App, { AppContext } from 'next/app';
import { Provider } from 'react-redux';
import withRedux from 'next-redux-wrapper';
// Props, makeStore, getUser, getNotifications, service, theme and
// ThemeProvider are project-specific (imports omitted)

class BaseApp extends App<Props> {
  public static async getInitialProps({ Component, ctx }: AppContext) {
    const { store } = ctx;

    // The results now live in the Redux store instead of being returned as props
    await store.dispatch(getUser(service));
    await store.dispatch(getNotifications(service));

    let pageProps;
    if (Component.getInitialProps) {
      pageProps = await Component.getInitialProps(ctx);
    }

    return { pageProps };
  }

  public render() {
    const { Component, pageProps, store } = this.props;

    return (
      <Provider store={store}>
        <ThemeProvider theme={theme}>
          <Component {...pageProps} />
        </ThemeProvider>
      </Provider>
    );
  }
}

export default withRedux(makeStore)(BaseApp);
```

By using Redux, we also got access to its ecosystem (for example Redux DevTools, which is very powerful).

To conclude, even if the combination of React hooks and contexts is nice, it was too limited for our SSR needs, since getInitialProps cannot read contexts. We preferred to stick with good old Redux, which is still very powerful.

Authentication

Authentication was an interesting challenge. In a basic SPA, you can store the session identifier (or auth token) in various places, most commonly in localStorage or in a cookie.

With SSR, you don't have that choice: localStorage doesn't exist on the server, so you need to store the authentication data in cookies. Cookies are available on the server, and access to them can be restricted with the HttpOnly and Secure flags. The browser also sends them automatically with every request to your server, which is quite convenient. You just need to pay attention to CSRF issues.
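To illustrate those flags, here is a minimal sketch of a helper that builds the `Set-Cookie` value for a session cookie. The function name and its options are hypothetical, and the `SameSite` attribute is an addition not discussed above: it is one common way to reduce CSRF exposure.

```typescript
// Hypothetical helper; names and defaults are illustrative.
interface SessionCookieOptions {
  maxAgeSeconds?: number;
  sameSite?: "Strict" | "Lax" | "None";
}

function serializeSessionCookie(name: string, value: string, opts: SessionCookieOptions = {}): string {
  const parts = [`${name}=${encodeURIComponent(value)}`, "Path=/"];
  if (opts.maxAgeSeconds !== undefined) parts.push(`Max-Age=${opts.maxAgeSeconds}`);
  parts.push("HttpOnly"); // not readable from client-side JS (document.cookie)
  parts.push("Secure"); // only sent over HTTPS
  // SameSite limits when the browser attaches the cookie to cross-site requests
  parts.push(`SameSite=${opts.sameSite ?? "Lax"}`);
  return parts.join("; ");
}
```

On a Node server, the result could be passed to `res.setHeader("Set-Cookie", ...)` when the user logs in.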

However, cookies are not sent automatically when the request comes from the server (from NextJS to your API). The same goes for the other headers of the initial request (client IP address, user agent, etc.).

If your API relies on these headers, you need to forward them manually. Otherwise, the API will receive your server's IP address and the default User-Agent of your HTTP library.

To handle this, we instantiate a common HTTP client in the App getInitialProps, pass it to the page getInitialProps, and make sure that every service uses it.

```tsx
// api/client.ts
import axios, { AxiosInstance, AxiosRequestConfig } from 'axios';
import { IncomingMessage } from 'http';

export type HttpClient = AxiosInstance;

// Server side: forward the headers of the incoming browser request to the API
export const createServerClient = (req: IncomingMessage): HttpClient => {
  return axios.create({
    responseType: 'json',
    headers: {
      Cookie: req.headers.cookie || '',
      'User-Agent': req.headers['user-agent'],
      'X-Forwarded-For': req.headers['x-forwarded-for'] || '',
      // ... forward all the headers you want
    },
  });
};

// Browser side: the browser attaches the cookies itself
export const createBrowserClient = (config: AxiosRequestConfig = {}): HttpClient => {
  return axios.create({
    responseType: 'json',
    withCredentials: true, // needed to send cookies (HttpOnly ones included) on cross-origin requests
    ...config,
  });
};

// pages/_app.tsx
class BaseApp extends App<Props> {
  public static async getInitialProps({ Component, ctx }: AppContext) {
    const { req } = ctx;
    // req only exists when getInitialProps runs on the server
    const client = req ? createServerClient(req) : createBrowserClient();
    const service = new MyService(client);
    const user = await service.getUser();

    let pageProps;
    if (Component.getInitialProps) {
      pageProps = await Component.getInitialProps({ ...ctx, client });
    }

    return { user, pageProps };
  }

  // ....
}
```
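One detail in `createServerClient`: it copies `X-Forwarded-For` as-is, so when the NextJS server is the first hop, that header may be empty and the API still sees the server's IP. By convention, each proxy appends the address it received the request from. The sketch below (a hypothetical `buildForwardedHeaders` with an illustrative header allow-list) builds the forwarded headers that way; in NextJS, the address could come from `req.socket.remoteAddress`.

```typescript
// Simplified version of Node's IncomingHttpHeaders (header names are lower-case).
type IncomingHeaders = Record<string, string | string[] | undefined>;

// Hypothetical allow-list: forward only what your API actually reads.
const FORWARDED_HEADERS = ["cookie", "user-agent", "accept-language"];

function buildForwardedHeaders(incoming: IncomingHeaders, remoteAddress?: string): Record<string, string> {
  const out: Record<string, string> = {};
  for (const name of FORWARDED_HEADERS) {
    const value = incoming[name];
    if (value !== undefined) out[name] = Array.isArray(value) ? value.join(", ") : value;
  }

  // Append the address we received the request from to the existing chain,
  // instead of replacing it, so the API can still find the original client IP.
  const prior = incoming["x-forwarded-for"];
  const chain: string[] = Array.isArray(prior) ? [...prior] : prior ? [prior] : [];
  if (remoteAddress) chain.push(remoteAddress);
  if (chain.length > 0) out["x-forwarded-for"] = chain.join(", ");

  return out;
}
```

In `createServerClient`, the result could then replace the hand-written object: `headers: buildForwardedHeaders(req.headers, req.socket.remoteAddress)`.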

Conditional rendering based on the device/cookies

Sometimes we wanted to adapt our layout depending on the screen size or on whether the user was on a touch device.

On the client-side you are quite free, you can do that in JS from wherever you want (component, utility function, ...), as the user agent is available globally with navigator.userAgent.

On the server, the only way to access the user agent is from the request headers, which are only available in your app or page getInitialProps function.

You could also decide not to render components that rely on browser-specific features on the server, or to use useEffect (which only runs on the client). The downside of both solutions is that the user will notice the change when the page is painted: for a few milliseconds, they might see two different versions of your layout (the wrong one, then the right one).

This is not something we wanted, so the only solution is to pass the userAgent to the component. We decided to store it in Redux so it is available everywhere.

This is not hard to fix; it's just one more example of the "tricky" things that are easy to forget when starting an SSR project.

```tsx
// pages/_app.tsx
class BaseApp extends App<Props> {
  public static async getInitialProps({ Component, ctx }: AppContext) {
    const { req, store } = ctx;

    // We detect the platform only on the server, as it won't change across pages
    if (req) {
      store.dispatch(detectPlatform(req.headers['user-agent']));
    }

    // ...
  }
}
```
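The `detectPlatform` action creator is not shown above. Here is one possible shape for it and for a matching reducer, as a sketch: the names and the user-agent regexes are hypothetical and deliberately naive (real device detection has many more edge cases, and some tablets identify themselves as mobile). Since `req.headers['user-agent']` can be undefined, the sketch defaults to an empty string.

```typescript
type PlatformState = { isMobile: boolean; isTouch: boolean };
type DetectPlatformAction = { type: "platform/detected"; payload: PlatformState };
type AnyAction = { type: string };

// Naive user-agent heuristics, for illustration only.
function detectPlatform(userAgent: string = ""): DetectPlatformAction {
  const isMobile = /Mobi|Android|iPhone|iPod/i.test(userAgent);
  const isTouch = isMobile || /iPad|Tablet/i.test(userAgent);
  return { type: "platform/detected", payload: { isMobile, isTouch } };
}

const initialPlatform: PlatformState = { isMobile: false, isTouch: false };

// Kept in Redux, the result is available to any component through a selector.
function platformReducer(state: PlatformState = initialPlatform, action: AnyAction): PlatformState {
  if (action.type === "platform/detected") {
    return (action as DetectPlatformAction).payload;
  }
  return state;
}
```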

Optimize your getInitialProps

The getInitialProps function is asynchronous, and it is important to optimize it, as it will be the main blocker of your page: NextJS won't start rendering anything until getInitialProps has resolved.

When you want to add something to this function, you should always think carefully about it. Here are some questions that helped us:

Is this needed to render the page?

If the data isn't necessary for the first render, it may be better to load it on the client with useEffect.

Can it be executed in parallel?

You should always try to run independent async functions in parallel so you don't block the rendering for too long. Be careful with await: awaiting each call one after the other runs them sequentially.

```ts
await asyncFn(); // -> 300ms
await otherAsyncFn(); // -> 250ms
// ---> the function will be resolved in at least 300 + 250 = 550ms

// Use Promise.all() to run these functions in parallel:
// the promise resolves in ~300ms instead of 550ms (the duration of the slowest function)
await Promise.all([asyncFn(), otherAsyncFn()]);
```

Can it be cached?

If it can, consider caching it at the service level with a simple cache library (lru-cache, for example). Caching requests is very easy in NodeJS, as memory is shared across requests.
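To make the idea concrete without relying on lru-cache's exact API, here is a small hand-rolled stand-in: an LRU cache with a time-to-live, plus a `cached` helper that stores the promise itself so concurrent requests share a single call. All names are hypothetical. One caveat follows from the memory being shared: only cache data that is the same for every visitor, never responses that depend on the user's cookies.

```typescript
// Hand-rolled LRU + TTL cache (a stand-in for a library such as lru-cache).
// A Map iterates in insertion order, so its first key is the least recently used.
class TtlLruCache<V> {
  private entries = new Map<string, { value: V; expiresAt: number }>();
  private maxEntries: number;
  private ttlMs: number;
  private now: () => number;

  constructor(maxEntries: number, ttlMs: number, now: () => number = Date.now) {
    this.maxEntries = maxEntries;
    this.ttlMs = ttlMs;
    this.now = now;
  }

  get(key: string): V | undefined {
    const entry = this.entries.get(key);
    if (!entry) return undefined;
    if (entry.expiresAt <= this.now()) {
      this.entries.delete(key); // expired
      return undefined;
    }
    this.entries.delete(key); // re-insert to mark as most recently used
    this.entries.set(key, entry);
    return entry.value;
  }

  set(key: string, value: V): void {
    this.entries.delete(key);
    this.entries.set(key, { value, expiresAt: this.now() + this.ttlMs });
    if (this.entries.size > this.maxEntries) {
      const oldest = this.entries.keys().next().value;
      if (oldest !== undefined) this.entries.delete(oldest); // evict least recently used
    }
  }

  delete(key: string): void {
    this.entries.delete(key);
  }
}

// Service-level caching: the promise is cached so concurrent callers share one request.
// Failed calls are evicted so an error is not served from the cache.
function cached<V>(cache: TtlLruCache<Promise<V>>, key: string, load: () => Promise<V>): Promise<V> {
  const hit = cache.get(key);
  if (hit) return hit;
  const pending = load();
  cache.set(key, pending);
  pending.catch(() => cache.delete(key));
  return pending;
}
```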

Does this need to be executed on the server AND the client?

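This only concerns the code executed in the App getInitialProps, which runs on the server for the first page load and in the browser on every client-side navigation. Sometimes you only need the data the first time the user loads the page (device, user data, ...).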

What should I do if the function fails?

Failures can and will happen, so you'd better be prepared to handle them. If you send a request to an API, the API could be temporarily down, for example. Think about a fallback, and if you can't have one, short-circuit getInitialProps: throw an exception and catch it in the App, so you know you can stop sending requests and render an error page.

Here is an example of how you can handle errors in your app.

```tsx
// pages/_app.tsx
import App, { AppContext } from 'next/app';
import Error from 'next/error'; // Next.js built-in error page
import { Provider } from 'react-redux';

class BaseApp extends App<Props> {
  public static async getInitialProps({ Component, ctx }: AppContext) {
    let pageProps, errorCode;
    const { res, req, store } = ctx;
    const client = req ? createServerClient(req) : createBrowserClient();

    if (req) {
      const service = new UserService(client);

      try {
        await store.dispatch(loadUser(service));
      } catch (e) {
        errorCode = getErrorCode(e);
        logError(...);
      }

      try {
        await store.dispatch(doSomething(service));
      } catch (e) {
        // We can live without this, we just log the error
        logError(...);
      }
    }

    // No need to fetch the page props if there is an error, as we'll render the error page
    if (!errorCode && Component.getInitialProps) {
      try {
        // i18n is the app's translation instance (setup omitted)
        pageProps = await Component.getInitialProps({ ...ctx, client, i18n, t: i18n.t.bind(i18n) });
      } catch (e) {
        errorCode = getErrorCode(e);
        logError(...);
      }
    }

    // Set the response status code (server side only)
    if (res && errorCode) {
      res.statusCode = errorCode;
    }

    // Return errorCode as well so that render() can show the error page
    return { pageProps, errorCode };
  }

  public render() {
    const { Component, pageProps, errorCode, store } = this.props;

    return (
      <Provider store={store}>
        <ThemeProvider theme={theme}>
          {errorCode ? <Error statusCode={errorCode} /> : <Component {...pageProps} />}
        </ThemeProvider>
      </Provider>
    );
  }
}
```
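For non-critical calls such as `doSomething` in the example above, a failure is not the only risk: a slow API also delays the whole render. One option, sketched below with a hypothetical `withFallback` helper, is to race the call against a timer and fall back to a default value on error or timeout. Note that this does not cancel the underlying request; it only stops waiting for it.

```typescript
// Hypothetical helper: resolves with `fallback` if `promise` rejects
// or doesn't settle within `timeoutMs`. The underlying call is not cancelled.
function withFallback<T>(promise: Promise<T>, fallback: T, timeoutMs: number): Promise<T> {
  let timer: ReturnType<typeof setTimeout> | undefined;
  const timeout = new Promise<T>((resolve) => {
    timer = setTimeout(() => resolve(fallback), timeoutMs);
  });
  const safe = promise.catch(() => fallback); // errors become the fallback value
  return Promise.race([safe, timeout]).then((value) => {
    clearTimeout(timer); // don't keep the timer alive once we have a result
    return value;
  });
}
```

For example, `await withFallback(service.getRelatedJobs(), [], 300)` would let the page render with an empty list if a hypothetical `getRelatedJobs` call fails or takes longer than 300 ms.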

Performance and resources

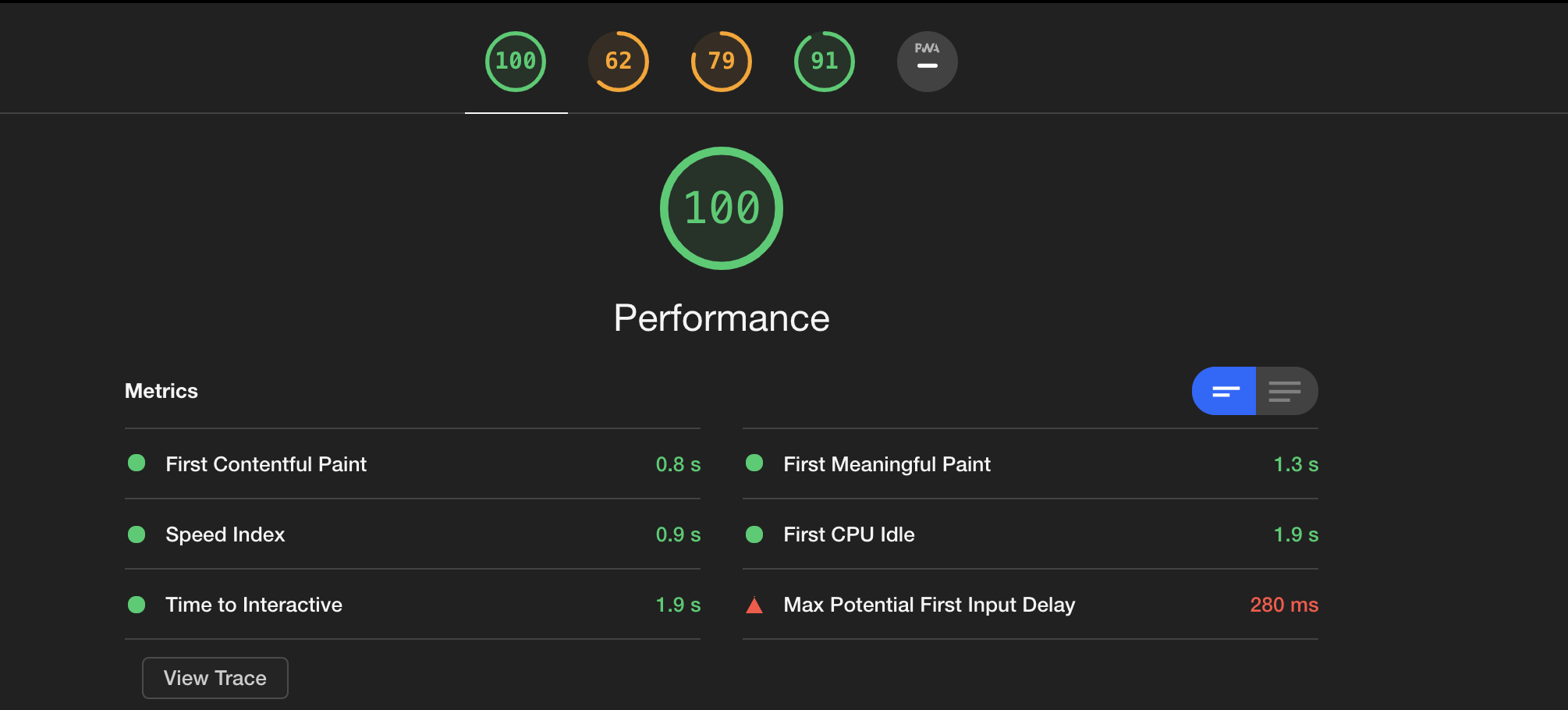

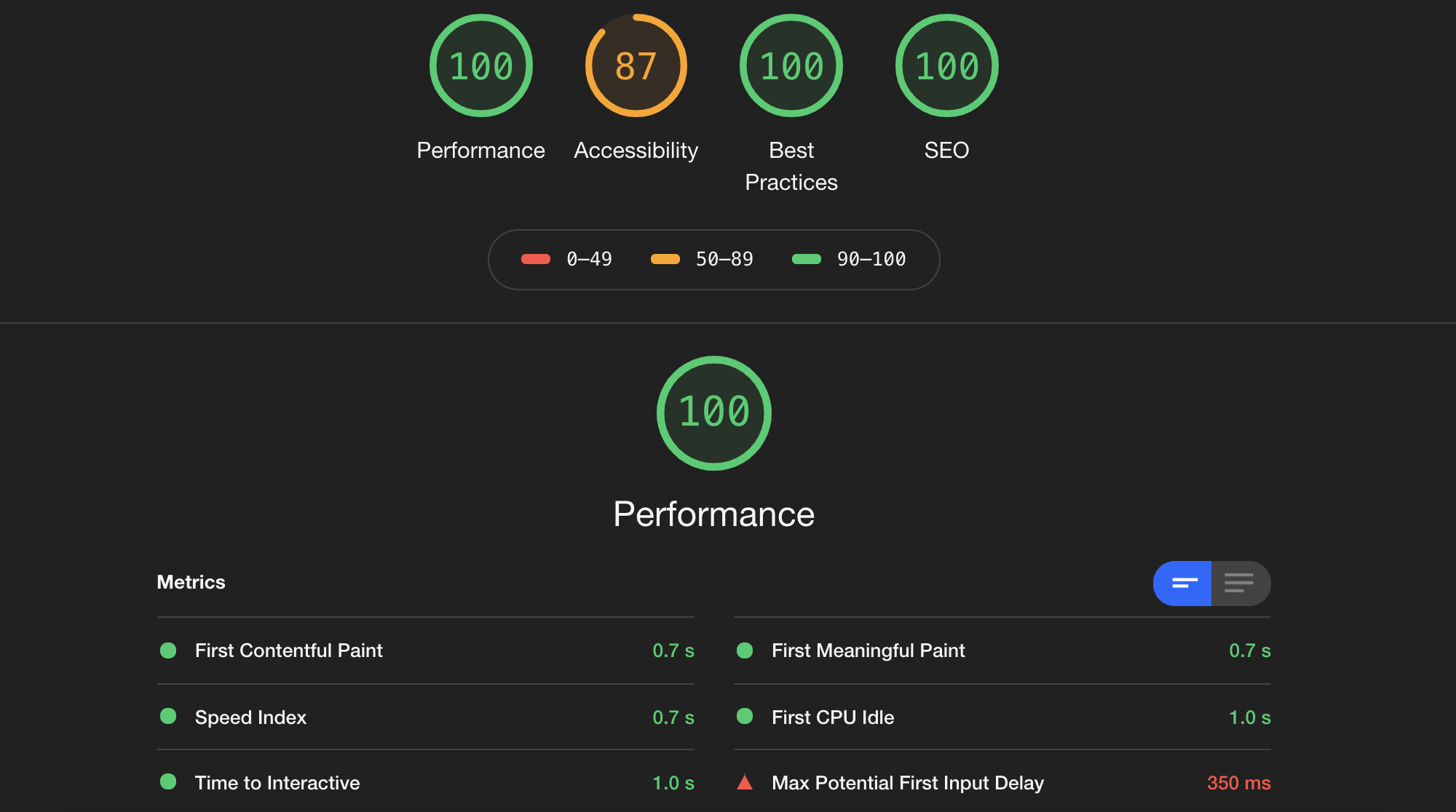

Before:

Now:

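When we decided to go for SSR, no one knew what to expect in terms of performance. We knew that SSR can be slow because rendering is synchronous: React's renderToString() blocks the NodeJS event loop until the whole page is rendered.

Moreover, NextJS doesn't support renderToNodeStream(), the streaming counterpart of React's renderToString(), which can send the first bytes of the page to the browser sooner.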

In the end, the response time is quite good and we are happy about it. All the speed metrics are much better with the new application than with PHP/AngularJS (Time to Interactive, First Meaningful Paint, etc).

The main caveat is that a NodeJS SSR application needs a minimum amount of CPU. When we deployed the application, we set the CPU limits a bit low, and the event loop started to lag a lot as the throughput increased. We raised these limits a bit and increased the probe timeouts on Kubernetes. Everything is working fine now: we have 3 pods running on our cluster and haven't needed to scale further so far (because we haven't migrated all our pages yet, the throughput is still reasonable).
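If you want to watch for this kind of event-loop lag yourself, Node.js ships a histogram for it in `perf_hooks` (`monitorEventLoopDelay`, available since Node 11.10). A minimal sketch, with a hypothetical `sampleEventLoopDelay` helper, could look like this:

```typescript
import { monitorEventLoopDelay } from "perf_hooks";

interface LoopDelayStats {
  meanMs: number;
  p99Ms: number;
  maxMs: number;
}

// Records event-loop delay for `durationMs`, then reports it in milliseconds.
// The histogram stores its values in nanoseconds, hence the conversion.
function sampleEventLoopDelay(durationMs: number): Promise<LoopDelayStats> {
  const histogram = monitorEventLoopDelay({ resolution: 10 }); // sample every 10 ms
  histogram.enable();
  return new Promise((resolve) => {
    setTimeout(() => {
      histogram.disable();
      const toMs = (ns: number) => ns / 1e6;
      resolve({
        meanMs: toMs(histogram.mean),
        p99Ms: toMs(histogram.percentile(99)),
        maxMs: toMs(histogram.max),
      });
    }, durationMs);
  });
}
```

Logging these numbers periodically and comparing them with the pods' CPU limits could help spot CPU starvation before the probes start failing; a p99 that keeps climbing under load is one hint worth investigating.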

What is next?


Migrate more pages, of course! Other teams will join us in this effort, we'll deploy 4 new pages this quarter, and we'll continue the migration gradually during the year.

We also plan to cache some pages for crawlers, as rendering components on the server is relatively slow and resource-intensive.

We'd also like to play with Kubernetes autoscaling, to be more flexible and scale faster.

Thanks for reading!

We're looking for new talent: apply now!